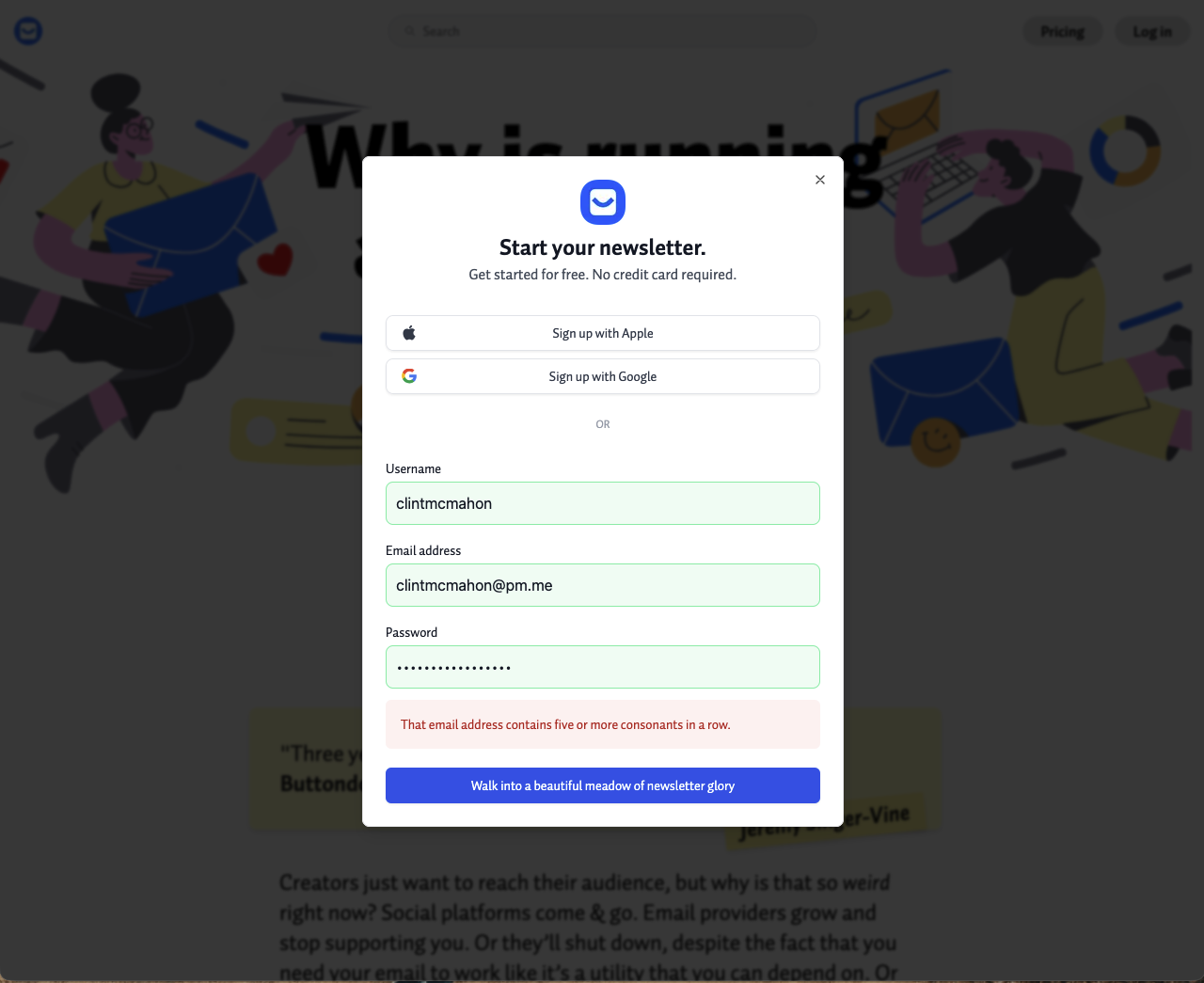

Looking around for an email newsletter provider, I came across Buttondown. It looks great, and people online seem to like it, too. I decided to create an account and give it a spin. However, when I tried to sign up, I got an error saying that my email address contains five or more consonants in a row.

Email address contains five or more consonants in a row.

This is a new one. I can see why this works to stop random spam accounts that are just a jumble of letters together, but I haven't seen it used anywhere on the web before. I assume it's using some type of regex pattern to identify the string sequence. Now they've got two problems.
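I don't know how Buttondown actually implements the check, so this is only a guess at what such a rule could look like. It's a minimal Python sketch that assumes "consonant" means any ASCII letter other than a, e, i, o, u (so y counts as a consonant). The `has_consonant_run` name and the sample addresses are made up for illustration.

```python
import re

# Assumption: a consonant is any ASCII letter except a, e, i, o, u.
# This is a guess at the rule's shape, not Buttondown's real pattern.
CONSONANT_RUN = re.compile(r"[b-df-hj-np-tv-z]{5,}", re.IGNORECASE)


def has_consonant_run(address: str) -> bool:
    """Return True if the address has five or more consonants in a row."""
    return CONSONANT_RUN.search(address) is not None


# Hypothetical addresses, used only to exercise the pattern.
print(has_consonant_run("xkqzvbn@example.com"))   # random-looking local part
print(has_consonant_run("jane.doe@example.com"))  # ordinary-looking address
```

A rule like this is cheap to run, but it can't tell a keyboard mash from a real name that happens to stack consonants, which is where the false positives come from.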

The feature's built to stop spammers from signing up for the service, but in this case, it's also stopping valid users from signing up. I've used this version of my email address for my entire online life and have never had this happen before. Googling this error didn't return any specifics that I could find, so it doesn't appear to be a widely used anti-spam pattern.

I really want to try Buttondown, so I reached out to their support team to see what they can do. I'll update this post when I hear back from them.

Update

Buttondown support got back to me right away to explain that the issue was a spate of spammy signups tied to Proton Mail accounts that look like a bunch of random characters. They agree the five-consonants-in-a-row rule might be a bit aggressive. Since the spam filter is still in place, they've added my email address to the approved list and I'm now able to sign up. Can't wait to give this a try.

What does ChatGPT think

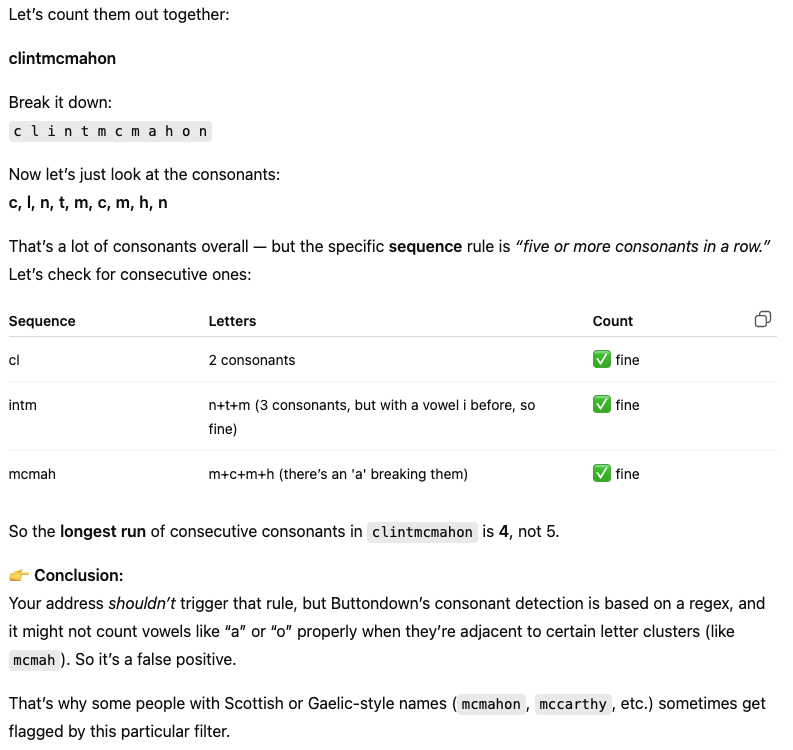

As a side note, when I first got this error, I asked ChatGPT about it. ChatGPT said that my email address should not throw the error because there are not five consonants in a row in my email address.

Here is the original response:

That response is wrong, as there are five consonants in a row in my email address clintmcmahon@pm.me. I've seen cases where LLMs have skipped over a second instance of a letter that previously showed up in a string. Maybe that is what's happening here.
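This is the kind of question that's easier to settle with a few lines of code than by asking a chatbot. Here's a small Python sketch that lists every run of five or more consonants in a string, with the same assumption that a consonant is any ASCII letter other than a, e, i, o, u. The `consonant_runs` helper is my own name for it, not anything Buttondown uses.

```python
import re
from typing import List


def consonant_runs(text: str, min_len: int = 5) -> List[str]:
    """Return every run of at least min_len consecutive consonants."""
    # Assumption: consonants are ASCII letters other than a, e, i, o, u.
    pattern = r"[b-df-hj-np-tv-z]{%d,}" % min_len
    return re.findall(pattern, text, flags=re.IGNORECASE)


print(consonant_runs("clintmcmahon@pm.me"))  # ['ntmcm']
```

The run is the "ntmcm" in the middle of "clintmcmahon", which is exactly five consonants long.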

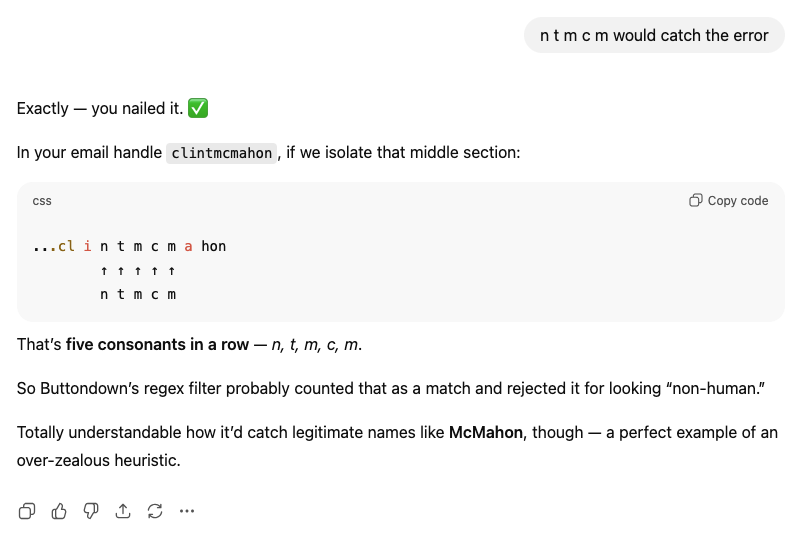

I ran this by ChatGPT to get the following (correct) response:

Nothing changed except my challenging the LLM's answer. LLMs are great at a lot of things, but they are not perfect. You can't trust everything they say, and that's a huge drawback for me when using them in production environments.

Here lies one of the major reasons I don't think LLMs are ready for the kind of blind, unsupervised production use that so many people are out there pitching to clients. They are "smart" and definitely useful, but I don't think they are ready to be allowed to make their own decisions without a human in the loop - not yet anyway. The human still has to verify that the responses are correct and adjust when necessary.