How I completely destroyed a production database

This is a story about how I completely destroyed a production database, what I did to fix it and the lessons I learned that I carry with me today.

My first job out of "college" was as a developer assigned to maintain a third-party financial application that managed stock options for clients of the large financial institution where I worked. It was a Windows Forms-based desktop app installed on each user's desktop machine. The app connected to a shared SQL Server database via a trusted connection, which meant user permissions were managed at the database level through their Windows credentials. But if you knew a valid SQL user and password, you could change the connection string from a trusted connection to one that used a SQL Server user id and password.

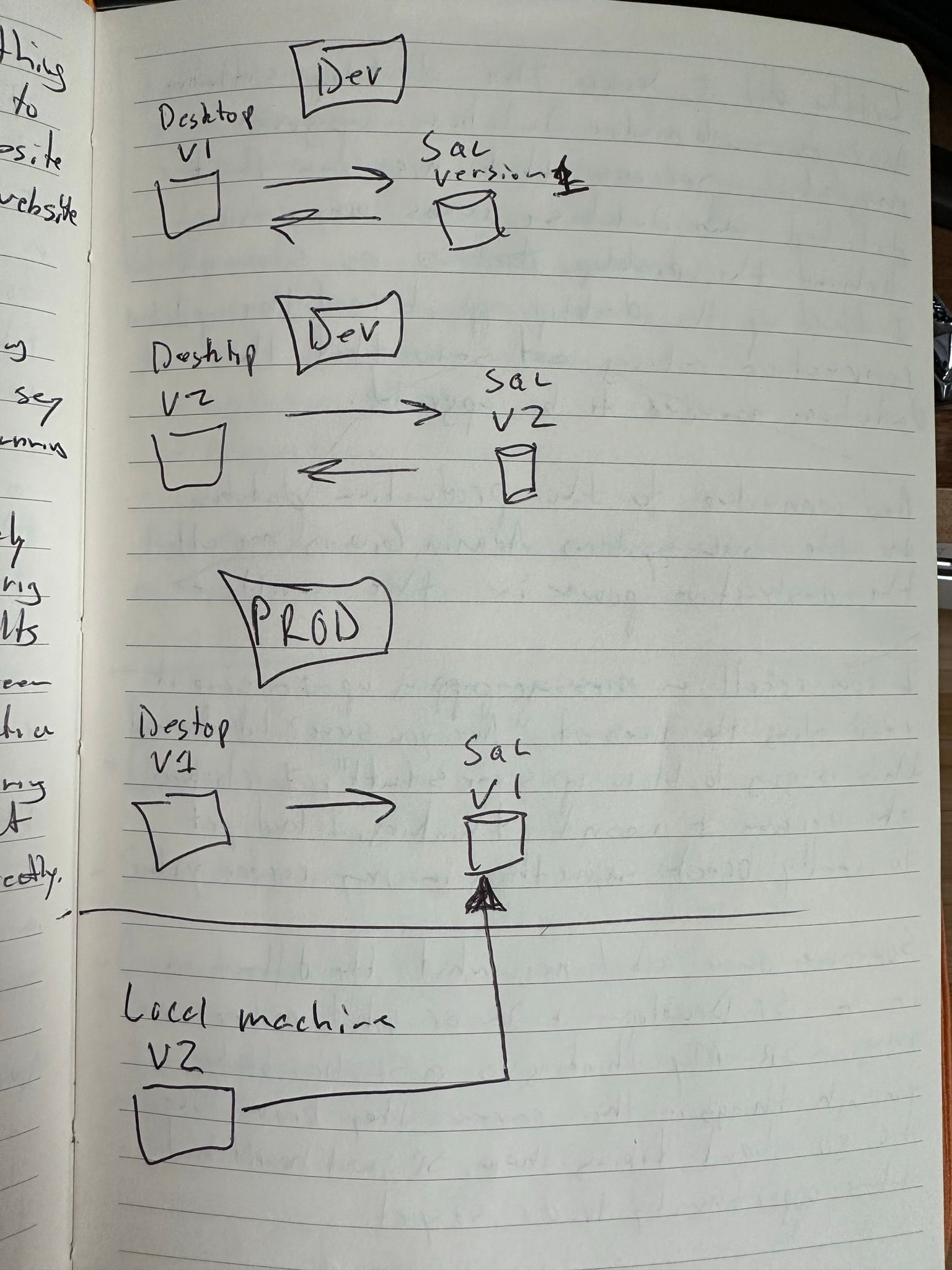
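
The difference between the two connection modes comes down to a few keywords in the connection string. Here is a minimal Python sketch, not the vendor's code, that classifies a connection string by how it authenticates. The server and database names are made up, and the keyword handling covers only the common SQL Server spellings (`Trusted_Connection`, `Integrated Security`, `User Id`, `UID`).

```python
def parse_connection_string(conn_str: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value' into a dict with lower-cased keys."""
    settings: dict[str, str] = {}
    for part in conn_str.split(";"):
        if not part.strip():
            continue  # skip the empty piece after a trailing ';'
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip()
    return settings


def auth_mode(conn_str: str) -> str:
    """Return 'windows', 'sql', or 'unknown' for a connection string."""
    settings = parse_connection_string(conn_str)
    trusted = settings.get("trusted_connection", "").lower() in {"true", "yes"}
    integrated = settings.get("integrated security", "").lower() in {"true", "yes", "sspi"}
    if trusted or integrated:
        return "windows"  # permissions come from the Windows account
    if "user id" in settings or "uid" in settings:
        return "sql"  # permissions come from whatever SQL login is named
    return "unknown"


# Hypothetical server and database names, for illustration only.
DEV_TRUSTED = "Server=dev-sql;Database=OptionsDev;Trusted_Connection=True;"
PROD_AS_SA = "Server=prod-sql;Database=Options;User Id=sa;Password=not-the-real-one;"

print(auth_mode(DEV_TRUSTED))  # windows
print(auth_mode(PROD_AS_SA))   # sql
```

The point is how small the change is: swapping one keyword for a user id and password is all it takes to trade your own limited permissions for those of the named login.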

I was running two versions of the software on my local machine in preparation for a software update sometime in the future. Both versions were pointing to their own DEV database on a DEV server, and each installation used a trusted connection rather than SQL authentication. Part of verifying the new system was seeing how the data was returned - was version 1 returning the same data as version 2, that kind of thing.

For some reason, and to this day I have no idea why, I wanted to compare production data. Without realizing it - or more accurately, without thinking - I updated the connection string of the new version of the app and pointed it to the production database. If I had been connecting with my Windows account, I don't think the next thing would have happened. However, I also made the fatal mistake of updating the connection string to use the SQL Server sa user and password.

A very detailed and highly technical diagram depicting the situation I found myself in:

Little did I know that the desktop software had an automatic database upgrade process that ran whenever the program detected that the database was any version behind the desktop version connecting to it. As soon as I fired up the desktop app, it read the updated connection string (SA!) and connected to the production database as the system administrator, giving me all the destructive power I could handle. The application saw that the database was on a previous version and therefore needed an upgrade.
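
In hindsight, the upgrade logic boils down to a version comparison, and a couple of extra checks would have stopped it from ever touching production. The sketch below is hypothetical Python, not how the vendor's software actually worked: the `Target` type, the `PROTECTED_SERVERS` list and the rule that the operator must type the database name are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical list of servers that must never be auto-upgraded.
PROTECTED_SERVERS = frozenset({"prod-sql"})


@dataclass(frozen=True)
class Target:
    """The database an app instance is about to connect to (illustrative)."""
    server: str
    database: str
    schema_version: int
    login: str


def needs_upgrade(target: Target, app_schema_version: int) -> bool:
    """The check from the story: is the database behind the app?"""
    return target.schema_version < app_schema_version


def may_auto_upgrade(
    target: Target,
    app_schema_version: int,
    typed_database: Optional[str] = None,
) -> bool:
    """Allow the upgrade only when every guard passes."""
    if not needs_upgrade(target, app_schema_version):
        return False
    if target.server.lower() in PROTECTED_SERVERS:
        return False  # production is upgraded deliberately, never on app start
    if target.login.lower() == "sa":
        return False  # a desktop app has no business running as sa
    # Clicking OK is too easy; require the database name to be typed out.
    return typed_database == target.database


dev = Target("dev-sql", "OptionsDev", schema_version=1, login=r"CORP\me")
prod = Target("prod-sql", "Options", schema_version=1, login="sa")

print(may_auto_upgrade(dev, 2, typed_database="OptionsDev"))  # True
print(may_auto_upgrade(prod, 2, typed_database="Options"))    # False
```

Any one of the three guards would have been enough in this case. Requiring the database name to be typed is the one that targets the specific failure here, which was confirming a dialog without reading it.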

I remember a message popping up that read something like "Are you sure you really want to do this action? It's definitely going to blow some stuff up". I clicked OK because I wasn't thinking or didn't realize I was pointing to the production database. I had yet to really break something in my young career.

Someone once asked me what the difference between a senior developer and a junior developer is. My thinking is that a senior developer has broken enough things in their career that they know how to go about getting them working again. The junior just hasn't had the opportunity yet.

After clicking OK I remember watching a modal describing what was happening - updating tables..., migrating database values... - and thinking "Oh, no! I've made a huge mistake".

I had just authorized the new version of the software to upgrade the existing production database. It updated the table structure and stored procedures, which resulted in a lot of data loss and effectively made the database unusable to anyone running the old version - which at this point was everyone in the company.

Realizing what had happened, I tried running the software version everyone else was running. It failed and I knew I was done. Since this was early in the morning, nobody else was in the office, so I just sat there alone thinking about what had happened. I'll never forget that lump of shame that just sat in my stomach and chest. This was the first major f'up of my career. I wasn't sure what was going to happen. Maybe I'd be fired and escorted out the door. My stomach was a pit of despair and my career was over just when it was getting started.

One of my favorite DJs is John Richards on KEXP, who frequently reminds us that we're not alone. Sometimes it does feel like we are, but if we look hard enough we will see that we are not. Luckily, I was not alone in this little screw up either. I was working with a great senior engineer who would go on to be one of my mentors, a person who taught me a lot about the working world as well as about software development. As the emails and phone calls started to come in from users who were unable to use the application, I went over to this person and laid it all out.

From start to finish I explained the situation. What I was doing, what I was trying to do, what happened, etc. He just got up, said "OK, let's go fix this." and we were off to the data center. That was it. I really thought he was going to unload on me, but he didn't. As I've learned many times over in the 20 years since, everyone screws up, and the majority of the time we can fix the problem. So, we simply walked over to the data center, talked to the DBAs and explained the situation, and within 20 minutes they had restored the previous night's database backup. Done.

All the data was restored, the tables were put back to their original state and all was good again. Since there were a good number of users who couldn't access their data that morning, we needed to tell our bosses, get things in the open and explain the root cause. I was really afraid of their reaction and had no idea how they were going to take the news. But it turns out they were pretty understanding of the whole thing. Some more than others, but the ones who were less than understanding didn't make for good managers anyway.

I've been meaning to put this story into words for a while now. Partially just to tell the story, but also as a reference for anyone else out there who has also completely destroyed something. You're not alone.

What I Learned

This experience, as brutal as it was then, has really taught me a lot and helped me in my programming career. Here are some things that I've learned and carried with me through my career.

- I should never have had access to the SA password on a production database, and therefore should never have had that kind of power. No developer should, and definitely not a developer with only a year of experience. Now when I get frustrated by overly secure DBAs, I can understand why and respect the authority. In all my projects now I make sure that we don't have any write permissions against the production database unless it's absolutely necessary. It makes life a little more difficult, but this policy makes sure there is no way we're overwriting a production database either.

- Double check everything. Like really take the time to look both ways on a one way street. Just because something isn't supposed to happen, or you don't think it could happen, doesn't mean that it won't. Try to be patient and think through the actions that you're taking.

- Honesty and owning up to your mistakes. Part of being a consultant is taking the blame for things. Sometimes even when they are not directly your fault.

The main lesson here is that nobody should ever give a 20-year-old with little experience the SA password to a production database and trust that things are going to be alright.